Project X: Begin

Hello friends. Today, we’re going to make a web hosting platform.

Now, you must be wondering… why in the world would you do that? Beyond an excuse to use my soon-to-be-legacy programming capabilities, I sat and reflected, jeez, why am I even on this website still? This website.

I don’t know. I won’t know, but I’m still here. So I thought, well, instead of being here why not doodle something?

The best advice would be to sit still and become the breeze, but maybe you could consider typing and listening to experimental post-rock and breakcore as another meditative retreat.

But upon more reflection, I must admit, after listening to goreshit on repeat for weeks, I’ve been thinking a little too much lately. Too convinced otherwise with all the wiring and audio generation or some uncomfortable attachment to such phantoms coming through the screen, fanning through the next releases for another hit.

As I drag my hands along some Barceloní shore I realize that I may as well make another sandcastle.

And what better way to do something than to write about it? I am betting that, if I keep writing, the website will make itself. That, in some ways, the website exists purely because it’s something to type. It’s better than typing the usual things I write.

Let’s begin!

The Beginning

We’re going to make this with Elixir and Phoenix. For those unfamiliar, Elixir is a programming language and Phoenix is a web framework — that is, a “scaffold” on how to create a dynamically rendered website. Dynamically rendered is where you generate the webpage upon request rather than serve the pre-made HTML like you do on neocities. This allows for many fun things, like user profiles and ways to destroy their sense of reality, seduce them with people they’ll never know, taking up Dunbar slots, just a slice of a profile wishing to be anyone else. Make a cult if you want!

Now, if you’re following along hoping to make your own website-host one day, fret not! You only have to let me know and I will go into more pedagogical detail: setting up your environment and whatever else. But, I imagine, purveyor you are, some flaneur of the neural nets devouring each street you hop along, vaguely disowned in the global meat market, so I imagine you’re here out of an over-the-hedge curiosity, nothing less. What happens next?

The first command will be:

mix phx.new <project_name>

This will create everything we’ll need to begin. See, after a while you realize opinions don’t matter. Yours certainly don’t. The only thing that matters is results, even if it requires sacrificing an entire people for the GDP and pension ponzis. This is why you use a framework: to accelerate your ponzis. I’m not sure what to call the project yet, so if you have any suggestions for amusing URLs, feel free to drop them. For now, let’s just call it “project”.

mix phx.new project

Let’s check out the folder. I will add notes on what everything does, and why.

cd project/

tree -L 2 .

.

├── _build # Build artifacts, like after you compile the

│ ├── dev # program.

├── assets # All things needed for the UI, as you can tell

│ ├── css # from the folder labels.

│ ├── js

│ ├── node_modules

│ ├── package-lock.json

│ ├── package.json

│ ├── tsconfig.json

│ └── vendor

├── config # Web applications usually have different

│ ├── config.exs # "environments" like dev, test, prod, and their

│ ├── dev.exs # own configs.

│ ├── prod.exs

│ ├── runtime.exs

│ └── test.exs

├── deps # This folder contains all the Elixir packages

# needed to run Phoenix. I've omitted them.

├── lib

│ ├── project # A Phoenix project is split up in Contexts --

│ ├── project_web # application logic (a.k.a. interfacing with the

│ ├── project_web.ex # database) goes under "project", while

│ └── project.ex # "project_web" holds all the stuff for HTML

├── mix.exs # The primary config file for any Elixir project,

# like package.json in npm. Where deps are added.

├── mix.lock

├── priv # This folder ships with the final compiled

│ │ # "release" (the live website)

│ ├── repo # Database stuff.

│ └── static # Compiled assets or images or anything else.

├── README.md

└── test # You want to test your code to build confidence —

├── project_web # shipping broken code is painful to deal with

├── support

└── test_helper.exs

Just a high-level overview. It’s okay if you don’t understand any of it.

The first time I went through a web application book was the famous Ruby on Rails one. Went through the whole book and forgot everything. But you could be convinced it meant something.

Anyway, let’s zero in on that lib/ folder, as that’s where we’ll be spending most of our time. It also houses all the code that processes an HTTP request.

tree lib/

lib

├── project

│ ├── application.ex # Entry point; starts all the processes

│ │ # the app depends on.

│ ├── mailer.ex # Email stuff.

│ └── repo.ex # Database connection.

├── project_web

│ ├── components # HTML snippets.

│ ├── controllers

│ │ ├── error_html.ex

│ │ ├── error_json.ex

│ │ ├── page_controller.ex # Loads data from DB and passes it to the

│ │ │ # HTML template.

│ │ └── page_html.ex # HTML template functions using DB data

│ │ # from the controller.

│ │

│ ├── endpoint.ex # First file to handle requests,

│ │ # forwards to the router.

│ ├── router.ex # Maps URLs to controllers.

│ └── telemetry.ex

├── project_web.ex # Entry point of the ProjectWeb context

│ # (HTTP handling and HTML generation).

└── project.ex # Entry point of the Project context

# (database functions).

Let’s go over the lifecycle of a request in a Phoenix application. You first get a request for a particular URL, like neocities.org/site/<user>, and endpoint.ex as well as router.ex attach additional information to the request and figure out what code needs to run to give the request the needed HTML. The chunks of code that router.ex invokes are called “controllers”, and in each controller, like page_controller.ex, you load all the data from the database, then pass it to the HTML template generators, e.g. page_html.ex. If all goes well, we send the generated HTML.

It’ll make more sense as we continue to build.
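
If it helps to see the shape of it, here’s a toy version of that lifecycle in TypeScript. None of this is Phoenix’s actual API: the names (Conn, routes, pageController, pageHtml) are made up for illustration, and the “database” is a plain object.

```typescript
// Toy model of endpoint -> router -> controller -> template.
// Every name here is illustrative; this is not how Phoenix is implemented.
type Conn = { path: string; requestId?: string; status?: number; body?: string }

// Stand-in for the database.
const fakeDb: Record<string, { bio: string }> = {
  alice: { bio: "I make sandcastles." },
}

// "Template": turns data into HTML.
function pageHtml(user: string, bio: string): string {
  return `<main><h1>${user}</h1><p>${bio}</p></main>`
}

// "Controller": loads data, then hands it to the template.
function pageController(conn: Conn, user: string): Conn {
  const row = fakeDb[user]
  if (!row) return { ...conn, status: 404, body: "Not found" }
  return { ...conn, status: 200, body: pageHtml(user, row.bio) }
}

// "Router": maps URL patterns to controllers.
const routes: Array<{ pattern: RegExp; handler: (conn: Conn, param: string) => Conn }> = [
  { pattern: /^\/site\/(\w+)$/, handler: pageController },
]

function router(conn: Conn): Conn {
  for (const route of routes) {
    const match = conn.path.match(route.pattern)
    if (match) return route.handler(conn, match[1])
  }
  return { ...conn, status: 404, body: "Not found" }
}

// "Endpoint": first stop; attaches extra info, then forwards to the router.
function endpoint(path: string): Conn {
  return router({ path, requestId: "req-1" })
}

const response = endpoint("/site/alice")
console.log(response.status, response.body)
```

The real thing has many more stops along the way (sessions, CSRF, logging), but the order of hand-offs is the same.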

Anyway, let’s go into a long diatribe about the state of the web development.

Web Development Paradigms

You may be well aware, but there are at least ~500 different configurations for spinning up a web server. It’s a plug-and-play endeavor, for each layer you have to deal with.

You can change the database you use, the database connection, caching, routing, background jobs, authentication, session or cookie strategies, deployment strategy, programming languages of course, etc. And for everything you choose there are trade-offs.

As you can imagine, those who think they can find some “holy grail” will find themselves wandering a desert mirage. This is reflected in the macro trends of web development, actually! Back in ~2005 we were doing what I described above (it’s called MVC), generating all the HTML on the server.

Then JavaScript got good enough to render HTML in your browser, and the server sends the data in a different format (usually JSON).

Now the big buzz is going back to rendering on the server as well as rendering in the browser. Arguments range from speed to better metrics when it comes to getting users to stay on the app. In any case, it’s gotten really complicated.

One could find some rebellion in this complicated status quo by adopting Rails, which still renders everything on the server and injects snippets on the page depending on the buttons clicked. There’s also Phoenix LiveView, which does the same with more interesting machinery.

The difficulty with the above is that the complexity and expectations of UI have gone up dramatically, and some of it is only possible through JavaScript. And while you can continue along adding small blocks of JavaScript here and there to match these websites that use JavaScript everywhere, you’re stuck doing ad-hoc imperative attachments to arbitrary HTML tags, and figuring out how it all looks in the end can get complicated. Is it possible? Absolutely. Can you reach the same level of UI/UX as those with full-on JavaScript rendering? I’m not sure, honestly.

Thus, as was the whole point of this diatribe, we’re getting rid of page_html.ex in the Phoenix request lifecycle and instead inserting JavaScript rendering. Just for kicks, we’ll do the same as all the full-on JavaScript frameworks do: we’ll render on the server as well as on the browser.

Elixir makes this easy, as you can spin up another longstanding process under application.ex.

So the new lifecycle will be controller -> call nodejs to render the HTML -> send to the browser -> browser then “hooks up” the rest of the JavaScript. Is it complicated? Yes. But I’m curious to see if it makes developing the website easier in the long run. If it doesn’t then that’s the lesson to take.

To achieve these objectives, we’ll be using Inertia, a library from the Laravel ecosystem. This allows us to use Phoenix for everything except the actual HTML rendering. For that, we’ll use React.

Thus, for the last bit of the web request cycle, instead of something like this:

# lib/project_web/controllers/page_controller.ex

defmodule ProjectWeb.PageController do

use ProjectWeb, :controller

def home(conn, _params) do

render(conn, :home, text: "Hello World!")

end

end

And here’s how it points to our page_html.ex:

# lib/project_web/controllers/page_html.ex

defmodule ProjectWeb.PageHTML do

@moduledoc """

This module contains pages rendered by PageController.

"""

use ProjectWeb, :html

def home(assigns) do

~H"""

<main>

<h1>Welcome to our web hosting platform.</h1>

<div>{@text}</div>

</main>

"""

end

end

We’ll now do something like:

# lib/project_web/controllers/page_controller.ex

defmodule ProjectWeb.PageController do

use ProjectWeb, :controller

def home(conn, _params) do

conn

|> assign_prop(:text, "Hello world")

|> render_inertia("Home")

end

end

By calling render_inertia("Home") we’ll fetch the corresponding React page and throw :text in there:

import React from "react"

import { Head } from "@inertiajs/react"

function Home(props: { text: string }) {

const { text } = props

return (

<main>

<Head title="Home" />

<h1>Welcome to our web hosting platform.</h1>

<small>{text}</small>

</main>

)

}

export default Home

This now allows us to have full access to JavaScript whenever we need it when composing parts of the website. There are downsides to this, of course. It does make the deployment story a little more complicated, due to caching or stale clients. The testing story isn’t that great compared to native Phoenix, too. But we’ll see if we can scale past these shortcomings.
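
For the curious, here’s roughly what travels over the wire. This is my reading of the Inertia protocol, sketched in TypeScript rather than lifted from the Phoenix adapter’s source: the first visit gets a full HTML document with the page object stuffed into a data-page attribute, later visits (marked with an X-Inertia header) get the same object as JSON, and a version mismatch gets a 409 so a stale client does a full reload. The respond function and its types are hypothetical.

```typescript
// Sketch of the Inertia request/response contract. `respond`, `InertiaPage`
// and `SketchResponse` are illustrative names, not part of any library.
type InertiaPage = { component: string; props: Record<string, unknown>; url: string; version: string }
type SketchResponse = { status: number; headers: Record<string, string>; body: string }

function escapeAttr(value: string): string {
  return value.replace(/&/g, "&amp;").replace(/"/g, "&quot;").replace(/</g, "&lt;").replace(/>/g, "&gt;")
}

function respond(page: InertiaPage, requestHeaders: Record<string, string>): SketchResponse {
  // First visit: a normal browser request, so send a whole HTML document.
  if (requestHeaders["x-inertia"] !== "true") {
    const html =
      '<!DOCTYPE html><html><body><div id="app" data-page="' +
      escapeAttr(JSON.stringify(page)) +
      '"></div></body></html>'
    return { status: 200, headers: { "content-type": "text/html" }, body: html }
  }
  // Stale client: its asset version differs from ours, so ask for a full reload.
  const clientVersion = requestHeaders["x-inertia-version"]
  if (clientVersion !== undefined && clientVersion !== page.version) {
    return { status: 409, headers: { "x-inertia-location": page.url }, body: "" }
  }
  // Later visits: just the page object as JSON.
  return {
    status: 200,
    headers: { "content-type": "application/json", "x-inertia": "true", vary: "X-Inertia" },
    body: JSON.stringify(page),
  }
}

const page: InertiaPage = { component: "Home", props: { text: "Hello world" }, url: "/", version: "abc123" }
const first = respond(page, {})
const later = respond(page, { "x-inertia": "true", "x-inertia-version": "abc123" })
const stale = respond(page, { "x-inertia": "true", "x-inertia-version": "old" })
console.log(first.status, later.body, stale.status)
```

The version check is the bit that’s meant to help with the “stale clients” problem.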

Anyway, the cool thing is how easy it is to add some JavaScript stuff now:

import { useState } from "react"

function Home() {

const [count, setCount] = useState(0)

return (

<main>

<h1>Welcome to our web hosting platform.</h1>

<button onClick={() => setCount(count + 1)}>{count}</button>

</main>

)

}

For comparison, here is the same thing in Stimulus, a library used in Rails to attach JavaScript to HTML.

We’d render this from whatever web server you’d like to use:

<main data-controller="count">

<h1>Welcome to our web hosting platform.</h1>

<button data-action="click->count#update" data-count-target="button">0</button>

</main>

And then this code would attach, stored away in a separate JavaScript file:

import { Controller } from "@hotwired/stimulus"

export default class extends Controller {

static targets = ["button"]

connect() {

this.count = 0

}

update() {

this.count += 1

this.buttonTarget.innerText = this.count

}

}

Okay, the diatribe is over. It’s okay if you skip all of it. Anyway, we now have everything wired up.

What’s the first thing that we need to do?

I think creating log in and user accounts seems like the best way to go about things.

Authentication

This is the first time I’m using this Inertia stuff, so we’ll both learn along the way. And it’s been a while since I used React, and hopefully I don’t have rose-colored glasses on because maybe this isn’t a good idea, but anyway.

Phoenix comes with an auth generator out of the box. Let’s run it:

mix phx.gen.auth Accounts User users

Accounts is our “context” and User is a submodule of that context. users is the table name within Postgres.

I would show you everything it generates but it generates a lot. A quick summary is that it adds login/logout pages, settings, email handling, a User module and Accounts module, as well as a Scope module which we can attach information about the user to (e.g. whether they are an admin).

I don’t think there’s any harm in leaving the HTML as is, but it’d be cool to later convert them to Inertia pages just to see how it works. May as well.

Let’s look at the registration controller that was generated:

# lib/project_web/controllers/user_registration_controller.ex

defmodule ProjectWeb.UserRegistrationController do

use ProjectWeb, :controller

alias Project.Accounts

alias Project.Accounts.User

def new(conn, _params) do

changeset = Accounts.change_user_email(%User{})

render(conn, :new, changeset: changeset)

end

def create(conn, %{"user" => user_params}) do

case Accounts.register_user(user_params) do

{:ok, user} ->

{:ok, _} =

Accounts.deliver_login_instructions(

user,

&url(~p"/users/log-in/#{&1}")

)

conn

|> put_flash(

:info,

"An email was sent to #{user.email}, please access it to confirm your account."

)

|> redirect(to: ~p"/users/log-in")

{:error, %Ecto.Changeset{} = changeset} ->

render(conn, :new, changeset: changeset)

end

end

end

What we want to do is switch this over to using a React page. Two things we’ll need to check are how the changeset is serialized and how errors are propagated. Sometimes the best way to figure that out is to get an error first.

Thus, let’s add our React page first, call it the SignUp.tsx. I’ve basically lifted the generated HTML to start:

// assets/js/pages/SignUp.tsx

import { Head } from "@inertiajs/react"

function SignUp(props) {

return (

<>

<Head title="Sign Up" />

<div className="mx-auto max-w-sm">

<header className="text-center">

Register for an account

<p>

Already registered?

<a href={"/users/log-in"} className="font-semibold text-brand hover:underline">

Log in

</a>

to your account now.

</p>

</header>

<form action="/users/register" method="post">

<input type="email" autoComplete="username" required />

<button className="w-full">Create an account</button>

</form>

</div>

</>

)

}

export default SignUp

Then we update the controller action:

# lib/project_web/controllers/user_registration_controller.ex

def new(conn, _params) do

render_inertia(conn, "SignUp")

end

Seeing as it’s a sign up page, we don’t have any data we need to send from the database. You may have noticed we removed one line:

changeset = Accounts.change_user_email(%User{})

This was to help fill in our form within our server-side HTML generators, but we don’t need it now since we’ll just use React. Here’s the page again, this time with Inertia’s useForm handling the form state and the submit:

import { Head, useForm } from "@inertiajs/react"

import { type FormEvent } from "react"

type SignUpForm = {

email: string

}

function SignUp() {

const { data, setData, post, processing, errors } = useForm<SignUpForm>({

email: "",

})

function submit(e: FormEvent<HTMLFormElement>) {

e.preventDefault()

post("/users/register")

}

return (

<>

<Head title="Sign Up" />

<div className="mx-auto max-w-sm">

<header className="text-center">

Register for an account

<p>

Already registered?

<a href={"/users/log-in"} className="font-semibold text-brand hover:underline">

Log in

</a>

to your account now.

</p>

</header>

<form onSubmit={submit}>

<input

type="text"

value={data.email}

onChange={(e) => setData("email", e.target.value)}

/>

{errors.email && <div>{errors.email}</div>}

<button type="submit" disabled={processing} className="w-full">

Create an account

</button>

</form>

</div>

</>

)

}

export default SignUp

I’ll do the same for the SignIn page and other stuff generated. There’s nothing too interesting about this conversion process, so I’ll come back here when it’s done.
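
On the “how are errors propagated” question: the errors object from useForm is keyed by field name, with one message per field. Ecto changesets can carry several messages per field, so somewhere on the way to the browser they get flattened. Here’s a sketch of that flattening in TypeScript, assuming the changeset errors arrive shaped like { email: ["has already been taken", ...] }. That shape and the flattenErrors name are my assumptions, not the adapter’s actual code.

```typescript
// Hypothetical shapes: several messages per field in, one message per field out.
type ChangesetErrors = Record<string, string[]>
type FormErrors = Record<string, string>

function flattenErrors(errors: ChangesetErrors): FormErrors {
  const flat: FormErrors = {}
  for (const field of Object.keys(errors)) {
    const messages = errors[field]
    // Keep only the first message; skip fields with no messages at all.
    if (messages.length > 0) flat[field] = messages[0]
  }
  return flat
}

const flat = flattenErrors({
  email: ["has already been taken", "must have the @ sign and no spaces"],
  nickname: [],
})
console.log(flat)
```

Whatever the real serialization looks like, the React side only needs errors.email to be a string it can render.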

Oh, there is one thing though.

So a cool thing about Phoenix is that you can think of a web request as a chain of functions. They’re called Plugs, but they’re basically functions.

Thus, you can create “pipelines” depending on the request type. This also helps when it comes to authorization. We want to make sure you’re already logged in before you access your home page.

## lib/project_web/router.ex

## Authentication routes

scope "/", ProjectWeb do

pipe_through [:browser, :redirect_if_user_is_authenticated]

get "/users/register", UserRegistrationController, :new

post "/users/register", UserRegistrationController, :create

end

scope "/", ProjectWeb do

pipe_through [:browser, :require_authenticated_user]

get "/users/settings", UserSettingsController, :edit

put "/users/settings", UserSettingsController, :update

get "/users/settings/confirm-email/:token", UserSettingsController, :confirm_email

end

scope "/", ProjectWeb do

pipe_through [:browser]

get "/users/log-in", UserSessionController, :new

get "/users/log-in/:token", UserSessionController, :confirm

post "/users/log-in", UserSessionController, :create

delete "/users/log-out", UserSessionController, :delete

end

The pipe_through under each scope runs all the attached functions we want on the connection before passing it off to any of the controllers. For an example of what these functions look like, let’s check out :require_authenticated_user:

Side note: In Elixir, functions are referenced in at least three different ways. You have the classic, which is what you’d see in most other languages, my_cool_function(). In Elixir, we also have these things called atoms; they’re unique symbols, written with a : in front. Function names in Elixir are stored as atoms, i.e. :my_cool_function. Finally, you may see some strange syntax like &my_cool_function/0. This is shorthand for capturing a function to pass it around without yet invoking it, i.e. anonymous_fn = fn -> my_cool_function() end.

It doesn’t matter if you understand any of this, but it warrants a subpar explanation.

# lib/project_web/user_auth.ex (auto-generated earlier)

@doc """

Plug for routes that require the user to be authenticated.

"""

def require_authenticated_user(conn, _opts) do

if conn.assigns.current_scope && conn.assigns.current_scope.user do

conn

else

conn

|> put_flash(:error, "You must log in to access this page.")

|> maybe_store_return_to()

|> redirect(to: ~p"/users/log-in")

|> halt()

end

end

This allows us to check if a user is authenticated before accessing a route.
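
The “chain of functions” idea is small enough to sketch in TypeScript. This is a toy model, not Plug itself: Conn, Plug and pipeThrough are made-up names, and the only behavior I’m copying is that each function takes the connection and returns it, and that a halted connection skips everything after it.

```typescript
// Toy model of a plug pipeline. All names are illustrative.
type Conn = {
  assigns: { currentUser?: { email: string } }
  flash: string[]
  redirectTo?: string
  halted: boolean
}
type Plug = (conn: Conn) => Conn

// Mirrors the generated plug: pass through if logged in, otherwise redirect and halt.
const requireAuthenticatedUser: Plug = (conn) => {
  if (conn.assigns.currentUser) return conn
  return {
    ...conn,
    flash: [...conn.flash, "You must log in to access this page."],
    redirectTo: "/users/log-in",
    halted: true,
  }
}

// Stand-in for the controller at the end of the chain.
const homeController: Plug = (conn) => ({ ...conn, flash: [...conn.flash, "controller ran"] })

// Run each plug in order, stopping as soon as one halts the connection.
function pipeThrough(plugs: Plug[], conn: Conn): Conn {
  let current = conn
  for (const plug of plugs) {
    if (current.halted) break
    current = plug(current)
  }
  return current
}

const anonymous = pipeThrough([requireAuthenticatedUser, homeController], {
  assigns: {},
  flash: [],
  halted: false,
})
const loggedIn = pipeThrough([requireAuthenticatedUser, homeController], {
  assigns: { currentUser: { email: "a@example.com" } },
  flash: [],
  halted: false,
})
console.log(anonymous.redirectTo, loggedIn.flash)
```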

We can modify this function to add the user and later access it in the front end:

if user = get_in(conn.assigns.current_scope.user) do

conn

|> assign_prop(:user, user)

else

# ...

end

Now every route that invokes :require_authenticated_user will have the user JavaScript object under props.

To make it so user can be passed to the front end, we need to let the JSON serialization protocol know which fields are okay to serialize by using @derive:

# lib/project/accounts/user.ex

defmodule Project.Accounts.User do

use Ecto.Schema

import Ecto.Changeset

@derive {Jason.Encoder, only: [:id, :email, :confirmed_at]}

schema "users" do

field :email, :string

field :password, :string, virtual: true, redact: true

field :hashed_password, :string, redact: true

field :confirmed_at, :utc_datetime

field :authenticated_at, :utc_datetime, virtual: true

timestamps(type: :utc_datetime)

end

# ...

end

Let’s check it out on the Home page:

// assets/js/pages/Home.tsx

import React, { useState } from "react"

import { Head } from "@inertiajs/react"

import Layout from "@/layouts/Default"

import { User } from "@/types"

function Home(props: { text: string; user: User }) {

const { text, user } = props

const [count, setCounter] = useState(0)

return (

<main>

<Head title="Home" />

<h1>Welcome to our web hosting platform, {user.email}.</h1>

<small>{text}</small>

<button onClick={() => setCounter(count + 1)}>{count} +</button>

</main>

)

}

Home.layout = (page: React.ReactNode) => <Layout>{page}</Layout>

export default Home

And it renders.
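
The User type imported from "@/types" isn’t shown above. A plausible version just mirrors the three fields we allowed in @derive, and the allow-list idea itself is easy to see in TypeScript. The pick helper and the exact field types are my guesses, not something the generators produce.

```typescript
// Assumed shape of the serialized user: only the fields named in @derive.
type User = { id: number; email: string; confirmed_at: string | null }

// Copy only the allow-listed keys; everything else stays on the server.
function pick<T extends object, K extends keyof T>(source: T, keys: readonly K[]): Pick<T, K> {
  const out = {} as Pick<T, K>
  for (const key of keys) out[key] = source[key]
  return out
}

// Stand-in for a database row, including a field we never want to leak.
const row = {
  id: 1,
  email: "a@example.com",
  hashed_password: "not-a-real-hash",
  confirmed_at: null as string | null,
}

const user: User = pick(row, ["id", "email", "confirmed_at"] as const)
console.log(JSON.stringify(user))
```

The nice property of an allow-list is that new columns are private by default: add a field to the schema and it won’t reach the browser until you name it.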

Anyway, I’m going to migrate the rest of the generated files to React and come back if I come across anything more novel.

Okay, everything is migrated. One issue I ran into was understanding how Inertia’s useForm works. It stores the previous state.

There are a lot of options you can pass within Inertia so it can be confusing.

Anyway.

Let’s move on.

What does MVP Look Like?

To reach MVP, we need a few things.

For starters, one needs to be able to upload/download files from whatever host we end up choosing here.

Let’s take inspiration from neocities/nekoweb and set up a feed of websites updating. There are a few improvements we could make here.

Anyway, we have Users, we need Website, SiteUpdate, and that’s it for now.

So I would consider it interesting enough to deploy if you could

- open an editor pane with your website, save

- create a SiteUpdate depending on timelapse

- have a subdomain route to the newly created website

That’s it. There are a lot of interesting things we can explore after we get this V1 done, but that’s for later.
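
For the “depending on timelapse” part, I’m picturing something like this: every save writes the file, but a new feed entry only appears if enough time has passed since the last one. A TypeScript sketch, where the one-hour gap and the function name are placeholders I made up:

```typescript
// Hypothetical rule: at most one SiteUpdate per hour per website.
const MIN_GAP_MS = 60 * 60 * 1000

function shouldCreateSiteUpdate(
  lastUpdateAt: Date | null,
  savedAt: Date,
  minGapMs: number = MIN_GAP_MS,
): boolean {
  // First ever save always produces an update.
  if (lastUpdateAt === null) return true
  return savedAt.getTime() - lastUpdateAt.getTime() >= minGapMs
}

const last = new Date("2025-06-04T12:00:00Z")
console.log(shouldCreateSiteUpdate(last, new Date("2025-06-04T12:10:00Z")))
console.log(shouldCreateSiteUpdate(last, new Date("2025-06-04T13:30:00Z")))
```

That keeps the feed from filling up with fifty entries from someone mashing save.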

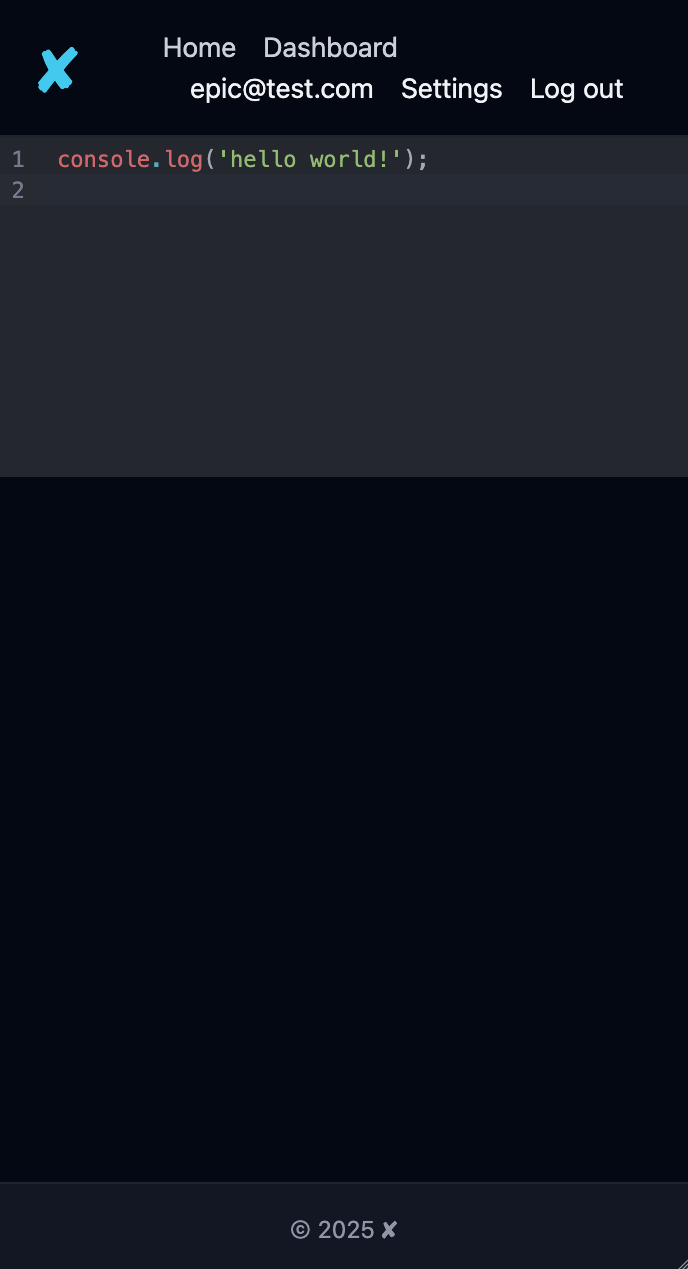

Editor view

Alright, well, let’s create an Editor page. We’ll be using CodeMirror because why not?

I navigate over to the assets/ and run npm i codemirror @codemirror/lang-javascript @uiw/react-codemirror.

Then I copy-paste the recommended beginning setup:

import React, { useState } from "react"

import Layout from "@/layouts/Default"

import CodeMirror, { ViewUpdate } from "@uiw/react-codemirror"

import { javascript } from "@codemirror/lang-javascript"

function Editor() {

const [value, setValue] = React.useState("console.log('hello world!');")

const onChange = React.useCallback((val: string, viewUpdate: ViewUpdate) => {

console.log("val:", val, "viewupdate:", viewUpdate)

setValue(val)

}, [])

return (

<CodeMirror

value={value}

height="200px"

extensions={[javascript({ jsx: true })]}

onChange={onChange}

theme={"dark"}

/>

)

}

export default Editor

Now just need to wire up the routing.

# lib/project_web/router.ex

scope "/", ProjectWeb do

pipe_through [:browser, :require_authenticated_user]

get "/", PageController, :home

get "/editor", PageController, :editor

get "/users/settings", UserSettingsController, :edit

put "/users/settings", UserSettingsController, :update

get "/users/settings/confirm-email/:token", UserSettingsController, :confirm_email

end

We’ll probably create an EditorController later for code organization, but for now I’ll just reuse the PageController.

# lib/project_web/controllers/page_controller.ex

def editor(conn, _params) do

conn

|> render_inertia("Editor")

end

We’ll be loading which file we’re editing within the above later on. I’m not exactly sure yet how our app and its files will interact (since those files will be stored elsewhere) but that’s for another day.

Let’s see what happens when we hit the url.

Cool. That works! Now when we click “save” it should update the file.

Before that, one thing bothering me was how Tab did not select the autocompletion. Additionally, web native APIs (like console) were not showing up.

After some research I found I had to add these lines:

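import { autocompletion, acceptCompletion } from "@codemirror/autocomplete"

import { indentWithTab } from "@codemirror/commands"

import { javascript, javascriptLanguage, scopeCompletionSource } from "@codemirror/lang-javascript"

import { keymap } from "@codemirror/view"

const windowCompletions = javascriptLanguage.data.of({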

autocomplete: scopeCompletionSource(globalThis),

})

<CodeMirror

extensions={[

javascript({ jsx: true }),

keymap.of([{ key: "Tab", run: acceptCompletion }, indentWithTab]),

autocompletion(),

windowCompletions,

]}

/>

Perfect!

Four hours later

I got sidetracked with how to clean up the autocomplete more.

Some of the properties on document like document.body weren’t showing up because they’re dynamically defined I guess.

Anyway, I have to figure out how to filter some undesirable results, like React internals and duplicates. I only noticed like two cases of duplicates though, so it should be fine.

const documentAutocomplete = javascriptLanguage.data.of({

autocomplete: (context: CompletionContext) => {

const word = context.matchBefore(/(?:document)\.\w*/)

if (!word) return null

const unwanted = ["_react", "location"]

const prefix = word.text.split(".")[1] || ""

const keys = getAllPropertyNames(document)

const options = keys

.filter((key) => key.startsWith(prefix) && !unwanted.some((un) => key.includes(un)))

.map((key) => ({

label: key,

type: typeof (document as any)[key] === "function" ? "function" : "property",

}))

return {

from: word.from + "document.".length,

options,

validFor: /^\w*$/,

}

},

})

And then we want to add the rest of the autocomplete extensions on startup.

useEffect(() => {

const unwanted = ["_reactListening"]

const base = scopeCompletionSource(globalThis)

const windowCompletions = javascriptLanguage.data.of({

autocomplete: async (ctx: CompletionContext) => {

const result = await base(ctx)

if (!result) return null

return {

...result,

options: result.options.filter((opt) => !unwanted.some((un) => opt.label.includes(un))),

}

},

})

setExtensions([...extensions, [windowCompletions, documentAutocomplete]])

}, [])

It was a big waste of time, but at least it autocompletes correctly (for now).
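
The documentAutocomplete snippet calls getAllPropertyNames, which I never showed. Here’s a minimal sketch of what such a helper can look like; treat it as an illustration rather than the exact code in the project. Properties like body are accessors defined on a prototype (Document.prototype) rather than on the document object itself, so anything that only looks at own properties will miss them. Walking the prototype chain picks them up.

```typescript
// Collect property names from an object and every prototype above it,
// stopping before Object.prototype so toString and friends stay out.
function getAllPropertyNames(target: object): string[] {
  const names = new Set<string>()
  let current: object | null = target
  while (current !== null && current !== Object.prototype) {
    for (const name of Object.getOwnPropertyNames(current)) names.add(name)
    current = Object.getPrototypeOf(current)
  }
  return Array.from(names).sort()
}

// Small stand-in for `document`: an accessor on a parent prototype,
// a method on the class prototype, and an own property on the instance.
class Base {
  get body() {
    return "<body>"
  }
}
class Doc extends Base {
  title = "hi"
  write() {}
}

const propertyNames = getAllPropertyNames(new Doc())
console.log(propertyNames)
```

The Set takes care of names that appear at more than one level of the chain, like constructor.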

Anyway, now that we’re here, the next step is to save and load in files. Maybe add a file tree.

The rough plan is to use some form of S3 storage for production, so I wondered whether it was worth trying to replicate it locally. You can install MinIO locally and test against that. Instead, I decided that it’s best to use the file system locally (and for tests) and later swap it out for S3.
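
The swap-it-out-later plan mostly comes down to hiding storage behind one small interface. The real version will live on the Elixir side; this TypeScript sketch only shows the shape, with an in-memory backend standing in for the local file system. FileStorage, MemoryStorage and saveFromEditor are hypothetical names.

```typescript
// The only operations the rest of the app is allowed to know about.
interface FileStorage {
  put(path: string, contents: string): Promise<void>
  get(path: string): Promise<string | null>
  remove(path: string): Promise<void>
}

// In-memory stand-in for local development and tests. A file-system or
// S3 backend would implement the same three methods.
class MemoryStorage implements FileStorage {
  private files = new Map<string, string>()

  async put(path: string, contents: string): Promise<void> {
    this.files.set(path, contents)
  }

  async get(path: string): Promise<string | null> {
    const contents = this.files.get(path)
    return contents === undefined ? null : contents
  }

  async remove(path: string): Promise<void> {
    this.files.delete(path)
  }
}

// Callers depend on the interface, never on a concrete backend.
async function saveFromEditor(
  storage: FileStorage,
  subdomain: string,
  file: string,
  contents: string,
): Promise<void> {
  await storage.put(`${subdomain}/${file}`, contents)
}

const storage = new MemoryStorage()
saveFromEditor(storage, "alice", "index.html", "<h1>hi</h1>")
  .then(() => storage.get("alice/index.html"))
  .then((contents) => console.log(contents))
```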

So after thinking about the database design for a bit, I’ve landed on this:

create table websites (

id bigint primary key,

owner_id bigint references users(id) on delete cascade,

subdomain text unique not null,

name text,

inserted_at timestamp default now(),

updated_at timestamp default now()

);

create table site_updates (

id bigint primary key,

website_id bigint references websites(id) on delete cascade,

title text,

description text,

inserted_at timestamp default now()

-- later we'll add more info to this, like

-- type text

);

create table files (

id bigint primary key,

path text not null,

mime_type text, -- for filtering and serving

size integer, -- reduce calls to storage to check total size

inserted_at timestamp default now(),

updated_at timestamp default now()

);

I think this is all we need to get started. I’m no database wizard though, there’s probably a better way to go about it. Let’s translate the above to Elixir.

To evolve any database you run a “migration” to alter its schema. Some web frameworks allow you to define these migrations in their own language:

mix ecto.gen.migration create_websites

The above command generates a migration file which you can then fill in:

# priv/repo/migrations/20250604174621_create_websites.exs

defmodule Project.Repo.Migrations.CreateWebsites do

use Ecto.Migration

def change do

end

end

And here it is filled in:

Two hours later

I did a bunch of research on UUIDs and was reminded how Stripe does IDs and, after a bit more thought, I switched to plain integer IDs because they’re easier and, more importantly, easier to query the database with, without necessarily compromising on performance. Ideally it’d be nice to have Stripe-style IDs, but I am not sure how much trouble they’d cause performance-wise. You can serialize and deserialize between Stripe-style IDs and UUIDs, but the mismatch between what you see when debugging the application and what’s in the database would be annoying.
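
For the record, here’s the kind of serialize/deserialize I was looking at, sketched in TypeScript: a UUID is just a 128-bit number, so you can re-encode it in base 62 and stick a type prefix in front. I have no idea how Stripe actually generates theirs; the prefix, alphabet and function names below are all my own choices.

```typescript
// Base-62 alphabet and hex digits; "0" is the zero digit in both.
const ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz"
const HEX = "0123456789abcdef"

// Convert a big number, given as digits in base `from`, to digits in base `to`
// using repeated long division (no BigInt needed).
function convertBase(digits: number[], from: number, to: number): number[] {
  const out: number[] = []
  let current = digits.slice()
  while (current.length > 0) {
    const quotient: number[] = []
    let remainder = 0
    for (const d of current) {
      const acc = remainder * from + d
      const q = Math.floor(acc / to)
      remainder = acc % to
      if (quotient.length > 0 || q > 0) quotient.push(q)
    }
    out.unshift(remainder)
    current = quotient
  }
  return out
}

function leftPad(s: string, length: number): string {
  let out = s
  while (out.length < length) out = "0" + out
  return out
}

// 22 base-62 characters are enough to hold any 128-bit value.
function encodeId(prefix: string, uuid: string): string {
  const hex = uuid.replace(/-/g, "").toLowerCase()
  if (!/^[0-9a-f]{32}$/.test(hex)) throw new Error("not a UUID: " + uuid)
  const digits = hex.split("").map((c) => HEX.indexOf(c))
  const encoded = convertBase(digits, 16, 62).map((d) => ALPHABET[d]).join("")
  return prefix + "_" + leftPad(encoded, 22)
}

function decodeId(id: string): { prefix: string; uuid: string } {
  const split = id.lastIndexOf("_")
  const digits = id.slice(split + 1).split("").map((c) => ALPHABET.indexOf(c))
  if (split < 0 || digits.some((d) => d < 0)) throw new Error("bad id: " + id)
  const hex = leftPad(convertBase(digits, 62, 16).map((d) => HEX[d]).join(""), 32)
  const uuid = [hex.slice(0, 8), hex.slice(8, 12), hex.slice(12, 16), hex.slice(16, 20), hex.slice(20)].join("-")
  return { prefix: id.slice(0, split), uuid }
}

const siteId = encodeId("site", "123e4567-e89b-12d3-a456-426614174000")
console.log(siteId, decodeId(siteId))
```

It round-trips fine, but you can see the annoyance: the string in your logs looks nothing like the value sitting in the database column.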

Anyway, a cool thing you can do instead of writing all of these modules and migrations out is just generate them:

mix phx.gen.context Hosting Website websites subdomain:string name:string owner_id:references:users

The files generated aren’t perfect, but they’re close enough that only minimal modifications are needed. It reminded me that I prefer to use microsecond precision in the database, so I had to make sure it does that in the config:

config :project,

ecto_repos: [Project.Repo],

generators: [timestamp_type: :utc_datetime_usec]

config :project, Project.Repo,

migration_timestamps: [type: :timestamptz],

after_connect: {Postgrex, :query!, ["SET TIME ZONE 'UTC';", []]}

Two hours later

I also ended up doing a ton of research on timestamps. The defaults of Phoenix exclude timezones. I don’t see why we can’t add them, along with microsecond precision.

Anyway, in order to use timestamptz with the generators, you just need to convert all :utc_datetime_usec to :timestamptz in your migration files.

One cool thing I discovered is the ability to use the built-ins of Ecto with timestamptz. It’s the line up there, with after_connect: so that when Ecto truncates by applying a ::timestamp type to its generated functions, it’ll still work.

Anyway, now let’s generate our Hosting context and Website schema.

mix phx.gen.context Hosting Website websites subdomain:string name:string owner_id:references:users

Which generates this migration:

defmodule Project.Repo.Migrations.CreateWebsites do

use Ecto.Migration

def change do

create table(:websites) do

add :subdomain, :string

add :name, :string

add :owner_id, references(:users, on_delete: :delete_all)

timestamps(type: :timestamptz)

end

create unique_index(:websites, [:subdomain])

create index(:websites, [:owner_id])

end

end

I decided to call the foreign key the owner. Maybe later we’d have a bunch of people editing one website, but even then it makes sense for one person to be the owner, or not? Another day.

You can also see we’re creating indices on the tables for quick database querying.
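
Since the subdomain ends up in a hostname and sits behind a unique index, it’s worth normalizing and checking it before it ever reaches the database. Here’s a TypeScript sketch of the rules for a DNS label (1 to 63 characters, letters, digits and hyphens, no hyphen at either end). The reserved list and the function name are placeholders of mine.

```typescript
// Hypothetical names we would not hand out to users.
const RESERVED = ["www", "api", "admin"]

function validateSubdomain(input: string): { ok: boolean; value: string; reason?: string } {
  // Lowercase first: hostnames are case-insensitive, a unique index on text is not.
  const value = input.trim().toLowerCase()
  if (value.length < 1 || value.length > 63) {
    return { ok: false, value, reason: "must be 1 to 63 characters" }
  }
  if (!/^[a-z0-9]([a-z0-9-]*[a-z0-9])?$/.test(value)) {
    return { ok: false, value, reason: "letters, digits and inner hyphens only" }
  }
  if (RESERVED.indexOf(value) !== -1) {
    return { ok: false, value, reason: "reserved" }
  }
  return { ok: true, value }
}

console.log(validateSubdomain("My-Site"))
console.log(validateSubdomain("-nope"))
```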

Two more hours of research

So I think I’ll go with a “multi-tenant” approach out of the box. That is, a user can be a part of multiple websites and, the key here, multiple users can belong to a website and mess around with it depending on their permissions.

We just need to remove the :owner_id and put that in another table.

We’ll call that table the WebsiteMembership table.

defmodule Project.Hosting.WebsiteMembership do

use Ecto.Schema

import Ecto.Changeset

@roles ~w(admin manager member)a

schema "website_memberships" do

field :role, Ecto.Enum, values: @roles, default: :member

belongs_to :user, Project.Accounts.User

belongs_to :website, Project.Hosting.Website

timestamps(type: :utc_datetime_usec)

end

@doc false

def changeset(membership, attrs, scope) do

membership

|> cast(attrs, [:role])

|> put_change(:user_id, scope.user.id)

|> put_change(:website_id, scope.website.id)

|> validate_required([:role, :user_id, :website_id])

|> unique_constraint([:user_id, :website_id])

end

end

Great. Let’s make sure we create a website for each user when they register.

Two more hours

So Phoenix recently added this “Scope” concept which is fantastic because we’re going to need it for our multiple users <-> multiple websites.

But I’ve been a bit bothered by how the generator goes about it. Sometimes it’s easy to fixate on what’s the Correct Way to do something, instead of asking What Needs To Be Done.

All Scopes do is allow you to check “is this request authorized” or “what resource am I creating for who” and go from there. So the whole point here is to not sweat it.

A couple of things we need to do here.

For one, we need to serialize the scope so we can render the actual website visited.

# lib/project_web/user_auth.ex

def require_authenticated_user(conn, _opts) do

if get_in(conn.assigns.current_scope.user) do

conn

|> assign_prop(:scope, conn.assigns.current_scope)

else

# ...We also need to add what fields are okay to serialize:

# lib/project/hosting/website_membership.ex

defmodule Project.Hosting.WebsiteMembership do

# ...

@derive {Jason.Encoder, only: [:id, :role]}

# ...

end

# lib/project/hosting/website.ex

defmodule Project.Hosting.Website do

# ...

@derive {Jason.Encoder, only: [:id, :subdomain, :name]}

# ...

end

Finally, we need to serialize the scope itself, as well as add both the Website and WebsiteMembership to it:

# lib/project/accounts/scope.ex

defmodule Project.Accounts.Scope do

alias Project.Accounts.User

alias Project.Hosting.{Website, WebsiteMembership}

@derive {Jason.Encoder, only: [:user, :website, :membership]}

defstruct user: nil, website: nil, membership: nil

def for_user(%User{} = user), do: %__MODULE__{user: user}

def for_user(nil), do: nil

def put_website(%__MODULE__{} = scope, %Website{} = website) do

%{scope | website: website}

end

def put_membership(%__MODULE__{} = scope, %WebsiteMembership{} = membership) do

%{scope | membership: membership}

end

end

__MODULE__ expands to the current module’s name, so %__MODULE__{} here is the same as writing %Scope{}.

So now we can access the scope on the frontend, which we’ll do soon. We also have our scope mostly set up. As we build out functions we can make sure a user is authorized, or which site they’re working on, etc.
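To make “is this user authorized” a bit more concrete on the frontend side, here is a minimal TypeScript sketch of a role check against the serialized scope. The role names mirror @roles from the membership schema, but the ranking (admin above manager above member) and the hasAtLeastRole helper are assumptions for illustration; nothing in the project defines them yet.

```typescript
// Hypothetical helper: the role names come from the membership schema,
// but the ordering below is an assumption, not project code.
type Role = "admin" | "manager" | "member"

type SerializedScope = {
  user?: { id: number }
  membership?: { id: number; role: Role }
}

// Higher number means more privileges (assumed ordering).
const ROLE_RANK: Record<Role, number> = { member: 0, manager: 1, admin: 2 }

function hasAtLeastRole(scope: SerializedScope, required: Role): boolean {
  const role = scope.membership?.role
  if (role === undefined) return false // no membership means no access
  return ROLE_RANK[role] >= ROLE_RANK[required]
}
```

A check like this only decides what to show in the UI. The server still has to enforce access on every request.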

I know the original point of all of this was to get a file and save it, but now that we’re doing multi-tenancy let’s create a website portal to all the websites you are a part of.

After that’s done, then we’ll start adding Hosting.File and Hosting.SiteUpdate. Maybe WebsiteMembership should be renamed to SiteMembership. Oh well.

Once Hosting.File is added, and we create the necessary functions to load and save files (from remotes with an API, too), then we can finally wire up that “Save” button in the editor view.

Ideally we make it so files are selectable in the sidebar, make it multiplayer… etc. But there’s the current roadmap. I think I’ll publish this when that roadmap is done, maybe, or maybe not.

So yeah, back to the website portal. It’s not a coincidence that all of this backend plumbing took up so much time: it’s easy to get lost in the weeds. I’m still thinking about doing stripe ids, honestly.

But when you build the frontend and backend in tandem you get a clear idea on what needs to happen next.

Website portal

So let’s make a website portal. We need an authenticated user. Let’s make the url /websites.

# lib/project_web/router.ex

defmodule ProjectWeb.Router do

use ProjectWeb, :router

# ...

scope "/", ProjectWeb do

pipe_through [:browser, :require_authenticated_user]

get "/", PageController, :home

get "/editor", PageController, :editor

get "/websites", WebsiteController, :index

get "/users/settings", UserSettingsController, :edit

put "/users/settings", UserSettingsController, :update

get "/users/settings/confirm-email/:token", UserSettingsController, :confirm_email

end

# ...

end

Let’s create that WebsiteController. Honestly, I’m going to make it SiteController instead and rename everything to Site because Website is too long. One moment.

Okay, we now have Hosting.Site and Hosting.SiteMembership.

Let’s create the SiteController.

# lib/project_web/controllers/site_controller.ex

defmodule ProjectWeb.SiteController do

use ProjectWeb, :controller

alias Project.Hosting

def index(conn, _params) do

sites = Hosting.list_sites()

conn

|> assign_prop(:sites, sites)

|> render_inertia("Sites/Index")

end

end

We’ll need to modify the list_sites to scope it to what the user is a part of.

# lib/project/hosting.ex

defmodule Project.Hosting do

# ...

def list_sites(%Scope{} = scope) do

from(s in Site)

|> join(:inner, [s], m in SiteMembership,

on: m.site_id == s.id and m.user_id == ^scope.user.id

)

|> Repo.all()

# ...

end

end

From all the available sites, get the ones where the user is a member. Let’s update the controller.

# lib/project_web/controllers/site_controller.ex

defmodule ProjectWeb.SiteController do

use ProjectWeb, :controller

alias Project.Hosting

def index(conn, _params) do

scope = conn.assigns.current_scope

sites = Hosting.list_sites(scope)

conn

|> assign_prop(:sites, sites)

|> render_inertia("Sites/Index")

end

end

Cool. Now let’s actually render it by creating a new React page. To do that, we need to define the types. I haven’t shown you this whole file yet, so here you go:

// assets/js/types.ts

export type User = {

id: number

confirmed_at: Date

email: string

}

export type Site = {

id: number,

subdomain: string,

name: string

}

export type Role = "admin" | "manager" | "member"

export type Membership = {

id: number,

role: Role

}

export type Scope = {

user?: User

site?: Site

membership?: Membership

}

export type Flash = Record<string, string>

I’m thinking of changing SiteMembership to just Membership because it seems fine enough. Actually, we can scope it under Site and it’ll work. One moment.

I instead just made it Membership. Let’s continue.

// assets/js/pages/Sites/Index.tsx

import { Scope, Site } from "@/types"

type SiteIndexProps = {

scope: Scope

sites: Site[]

}

function Index({ scope, sites }: SiteIndexProps) {

return (

<main>

{JSON.stringify(scope)} and also {JSON.stringify(sites)}

</main>

)

}

export default Index

Now, I’m thinking a user must have at least their base website, which is their username. They can’t delete it, they can only delete their account to delete it.

To create this website we need to create a function which creates both a website and membership. Then we need to call that function when a user signs up.

# lib/project/hosting.ex

def create_membership(%Scope{} = scope, attrs) do

with {:ok, membership = %Membership{}} <-

%Membership{}

|> Membership.changeset(attrs, scope)

|> Repo.insert() do

{:ok, membership}

end

end

def create_site(%Scope{} = scope, attrs) do

with {:ok, site = %Site{}} <-

Site.changeset(%Site{}, attrs, scope) |> Repo.insert(),

{:ok, _membership} <- create_membership(scope, %{role: :admin, site_id: site.id}) do

broadcast(scope, {:created, site})

{:ok, site}

end

end

You can ignore the broadcast() function. I’m not sure if we’re actually going to use it or not. The only purpose I can think of right now is if we connect a socket to each user and add notifications, like when someone “joins” the site, creates a file, updates a website in real time, etc. Hard to say if it’d be worth it.

Anyway, to tie it all together:

# lib/project/accounts.ex

# ...

def register_user_with_site(user_attrs, site_attrs) do

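Repo.transaction(fn ->

with {:ok, user} <- register_user(user_attrs),

{:ok, _site} <- Hosting.create_site(Scope.for_user(user), site_attrs) do

user

else

# Roll back so the caller gets {:error, reason} back instead of a MatchError.

{:error, reason} -> Repo.rollback(reason)

end

end)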

end

# ...

And now we just need to call it on registration.

# lib/project_web/controllers/user_registration_controller.ex

# ...

def create(conn, %{"user" => user_params}) do

case Accounts.register_user_with_site(user_params, %{

"subdomain" => "test",

"name" => "my epic site"

}) do

{:ok, user} ->

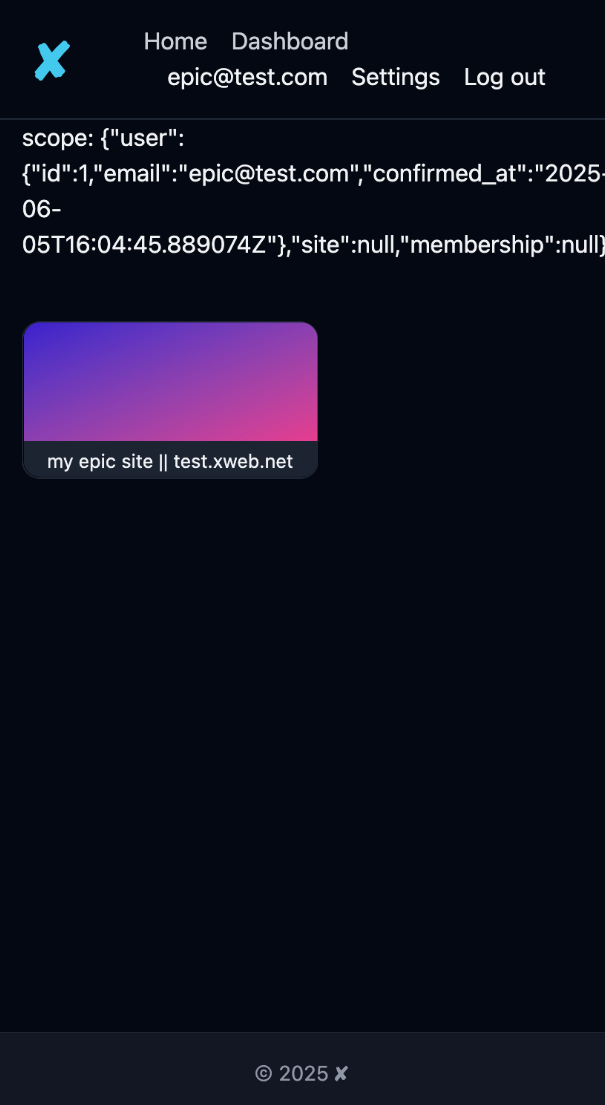

# ...Cool. We’ll need to update the registration page to accept a username slot. Let’s sign up and finally visit our Sites/Index.tsx page.

Before that, we can at least add a card.

// assets/js/pages/Sites/Index.tsx

import { Scope, Site } from "@/types"

type SiteIndexProps = {

scope: Scope

sites: Site[]

}

function SiteCard({ site }: { site: Site }) {

return (

<div className="rounded-xl w-fit overflow-hidden border border-gray-800 flex flex-col">

{/* This will be an image later */}

<div className="h-20 bg-gradient-to-br from-indigo-700 to-pink-500" />

<div className="bg-gray-800">

<small className="px-4">

{site.name} || {site.subdomain}.xweb.net

</small>

</div>

</div>

)

}

function Index({ scope, sites }: SiteIndexProps) {

return (

<main>

scope: {JSON.stringify(scope)}

<div className="flex gap-10 mt-10">

{sites.map((site) => (

<SiteCard site={site} key={site.id} />

))}

</div>

</main>

)

}

export default Index

And there we are:

To make sure the scope is available on every request, I created a separate plug:

# lib/project_web/user_auth.ex

# ...

def load_in_shared_props(conn, _opts) do

conn

|> assign_prop(:scope, conn.assigns.current_scope)

end

# ...

Great. Anyway, now that we can have multiple websites, let’s make it so clicking on one of the site cards brings you immediately to the editor. We will probably add a “site dashboard” view later, which then has a link to the editor. But I just want to get the core function working first.

Okay. So let’s modify that route to the Editor and have it scoped per website.

# lib/project_web/router.ex

# ...

scope "/sites/:subdomain", ProjectWeb do

pipe_through [:browser, :require_authenticated_user]

get "/editor", EditorController, :editor

end

# ...

The reason we’re creating an EditorController instead of using the SiteController is that editing the files of the website is technically different from editing the metadata of the website itself. It could potentially be renamed to FileController, depending.

Cool, now all we need to do is add a link to the new route.

function SiteCard({ site }: { site: Site }) {

return (

<div className="rounded-xl w-fit overflow-hidden border border-gray-800 flex flex-col">

{/* This will be an image later */}

<div className="h-20 bg-gradient-to-br from-indigo-700 to-pink-500" />

<div className="bg-gray-800">

<small className="px-4">

{site.name} ||

<a className="ml-1" href={`/sites/${site.subdomain}/editor`}>

edit →

</a>

</small>

</div>

</div>

)

}

And now when we click on this route, we have one more plug to install:

# lib/project_web/user_auth.ex

def assign_site_to_scope(conn, _opts) do

with %{"subdomain" => subdomain} <- conn.params,

%Scope{} = current_scope <- conn.assigns.current_scope,

%Site{} = site <- Project.Hosting.get_site_by_subdomain(current_scope, subdomain),

%Membership{} = membership <- Project.Hosting.get_membership(current_scope, site) do

updated_scope =

current_scope

|> Scope.put_site(site)

|> Scope.put_membership(membership)

assign(conn, :current_scope, updated_scope)

else

_ ->

flash =

if conn.params["subdomain"],

do: "You don't have access to that site.",

else: "Website not found!"

conn

|> put_flash(:error, flash)

|> redirect(to: ~p"/sites")

|> halt()

end

end

So whenever we have a route that has :subdomain in it, we want to see which site we’re dealing with and whether we have proper access.

Plug that into the router pipeline and we’re good to go:

# lib/project_web/router.ex

pipeline :browser do

# ...

plug :fetch_current_scope_for_user

plug :assign_site_to_scope

plug :load_in_shared_props

# ...

end

A pipeline is just a group of plugs.
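If it helps to see that idea outside of Elixir, here is a rough TypeScript model of a pipeline: a list of functions that each take a connection and return one, stopping as soon as something halts. FakeConn, runPipeline, the two example plugs, and the /login path are all made up for illustration; real Plug does far more than this.

```typescript
// A toy model of Plug pipelines. Every name here is illustrative.
type FakeConn = {
  params: Record<string, string>
  assigns: Record<string, unknown>
  halted: boolean
  redirectTo?: string
}

type PlugFn = (conn: FakeConn) => FakeConn

// Run plugs in order; once a plug halts, the remaining plugs are skipped.
function runPipeline(conn: FakeConn, plugs: PlugFn[]): FakeConn {
  return plugs.reduce((acc, plug) => (acc.halted ? acc : plug(acc)), conn)
}

// Redirects and halts when no user has been assigned.
const requireUser: PlugFn = (conn) =>
  conn.assigns["user"] ? conn : { ...conn, halted: true, redirectTo: "/login" }

// Records that it ran, so we can observe whether the pipeline reached it.
const markVisited: PlugFn = (conn) => ({
  ...conn,
  assigns: { ...conn.assigns, visited: true },
})
```

The order of the list matters: a plug that reads the scope has to come after the plug that assigns it, and nothing after a halting plug runs.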

Now when we click on the site card, it brings us to its editor page. Cool!

Now it’s time to make it so we can actually edit the website’s files.

It’s time to add Hosting.File to the mix.

Files

So let’s begin and create the files.

mix phx.gen.schema Hosting.File files \

path:string \

mime_type:string \

size:integer \

site_id:references:website

Cool. Let’s fix up the schema.

defmodule Project.Hosting.File do

use Ecto.Schema

import Ecto.Changeset

schema "files" do

field :path, :string

field :mime_type, :string

field :size, :integer, default: 0

belongs_to :site, Project.Hosting.Site

timestamps(type: :utc_datetime_usec)

end

@doc false

def changeset(file, attrs, scope) do

file

|> cast(attrs, [:path, :mime_type, :size])

|> validate_required([:path, :mime_type, :size])

|> put_change(:site_id, scope.site.id)

end

end

I know that I may want to use bigint instead of integer for the file size, because a Postgres integer is 32-bit and tops out at 2,147,483,647 bytes, just under 2 GiB. I’ll think about it as I approach deployment, however soon that is.
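To put a number on that limit: a Postgres integer is a signed 32-bit value, so the largest file size it can hold is 2^31 - 1 bytes. A quick TypeScript check of the arithmetic (the fitsInInteger helper is a made-up name):

```typescript
// Largest value a signed 32-bit Postgres `integer` column can store.
const INT4_MAX = 2 ** 31 - 1 // 2147483647 bytes, just under 2 GiB

// Hypothetical helper: would this byte count fit in an `integer` column?
function fitsInInteger(sizeInBytes: number): boolean {
  return Number.isInteger(sizeInBytes) && sizeInBytes >= 0 && sizeInBytes <= INT4_MAX
}

const twoGiB = 2 * 1024 ** 3
console.log(fitsInInteger(5 * 1024 ** 2)) // a 5 MiB file fits
console.log(fitsInInteger(twoGiB)) // exactly 2 GiB is one byte too many
```

For a static-site host that ceiling is generous per file, so the switch to bigint mostly matters if large uploads ever become a thing.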

Onto a few edits in the migration:

defmodule Project.Repo.Migrations.CreateFiles do

use Ecto.Migration

def change do

create table(:files) do

add :path, :string, null: false

add :mime_type, :string, null: false

add :size, :integer, null: false

add :site_id, references(:site, on_delete: :delete_all), null: false

timestamps(type: :timestamptz)

end

# Scoped to the site so two sites can each have their own index.html.

create unique_index(:files, [:site_id, :path])

create index(:files, [:site_id])

end

end

Added a unique index, though I’m not sure if we’d query by path just yet. I guess we would. It’ll be fine.

This would be a lot easier if, of course, we’d store everything in Postgres. But storing them as actual files means we don’t need to get a heavy server with beefy storage.

mix ecto.migrate

So now we can store what files a website has and get their paths to load. In our Editor controller, we’ll want to load the selected file. The question is, how is the file selected? We could default to an index.html. That’d have to be created with the website, probably.

We can figure out the file selection/tree later. Let’s just hardcode in index.html and use that. I guess we don’t need the %File{} struct just yet, but soon.

So if we already have index.html, then we need to fetch that from our storage provider. So let’s create a Storage module and then an adapter, Local.

Here’s our adapter:

# lib/project/storage/adapter.ex

defmodule Project.Storage.Adapter do

@callback save(String.t(), binary()) :: :ok | {:error, term()}

@callback load(String.t()) :: {:ok, binary()} | {:error, term()}

@callback delete(String.t()) :: :ok | {:error, term()}

end

And then in our main interface:

# lib/project/storage.ex

defmodule Project.Storage do

@behaviour Project.Storage.Adapter

@impl true

def save(path, content), do: adapter().save(path, content)

@impl true

def load(path), do: adapter().load(path)

@impl true

def delete(path), do: adapter().delete(path)

def adapter, do: Application.fetch_env!(:project, :storage)[:adapter]

end

The @impl true adds compile time checks on whether we’re actually implementing a behaviour.
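The same contract-plus-implementations shape can be sketched in TypeScript, which is handy if you ever want a throwaway in-memory adapter for tests. The FileStore interface and MemoryStore class below are hypothetical and only mirror the three callbacks above; they are not part of the project.

```typescript
// Result shapes loosely mirroring :ok / {:ok, value} / {:error, reason}.
type Ok<T> = { ok: true; value: T }
type Err = { ok: false; reason: string }

// Hypothetical counterpart to the Project.Storage.Adapter behaviour.
interface FileStore {
  save(path: string, content: string): Ok<null> | Err
  load(path: string): Ok<string> | Err
  remove(path: string): Ok<null> | Err
}

// An in-memory implementation. The compiler checks it against FileStore,
// which is roughly the job @impl does for a behaviour.
class MemoryStore implements FileStore {
  private files = new Map<string, string>()

  save(path: string, content: string): Ok<null> | Err {
    this.files.set(path, content)
    return { ok: true, value: null }
  }

  load(path: string): Ok<string> | Err {
    const content = this.files.get(path)
    return content === undefined
      ? { ok: false, reason: "enoent" }
      : { ok: true, value: content }
  }

  remove(path: string): Ok<null> | Err {
    return this.files.delete(path)
      ? { ok: true, value: null }
      : { ok: false, reason: "enoent" }
  }
}
```

The point of the indirection is the same in both languages: callers only know the contract, so swapping local disk for a remote bucket later is a config change rather than a rewrite.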

We can now configure it through our config.exs (which we’ll later split out with a backblaze implementation).

# config/config.exs

config :project, :storage,

adapter: Project.Storage.Local,

base_path: Path.expand("priv/storage")

Now, finally, we can create the Local adapter. All we’re doing is calling the built in File module.

defmodule Project.Storage.Local do

@behaviour Project.Storage.Adapter

defp base_path do

Application.fetch_env!(:project, :storage)[:base_path]

end

@impl true

def save(path, content) do

full_path = Path.join(base_path(), path)

File.mkdir_p!(Path.dirname(full_path))

File.write(full_path, content)

end

@impl true

def load(path) do

full_path = Path.join(base_path(), path)

case File.read(full_path) do

{:ok, content} -> {:ok, content}

error -> error

end

end

@impl true

def delete(path) do

full_path = Path.join(base_path(), path)

File.rm(full_path)

end

end

Can you spot the issue?

We aren’t accounting for the website. So we need to update these functions to include the %Scope{} so we can select the website’s directory to save everything. We don’t want to save a file to another website.

But I just want to save and load a file. We’ll fix this later.

Back to our Editor controller:

10 minutes later

I realized that I probably shouldn’t call this module File because it collides with Elixir’s built in module, File.

Though I can technically “shadow” it and the compiler will do fine, it’s probably for the better to rename it.

The split was between Document or SiteFile but Document doesn’t feel like it conveys things like .css or .js. So SiteFile it is.

This stuff is sorta irrelevant right now, seeing as we just want to load and save index.html. To do that we’ll hardcode it.

Hour of fiddling

So here’s what I had to do.

First, I needed to fix our authentication flow.

To do so I needed to split up &assign_site_to_scope/2.

Basically there was an infinite loop of redirects because the :browser pipeline covers unauthenticated routes too.

So if we can’t find a website, we’ll just let the plug pipeline continue:

# lib/project_web/user_auth.ex

def assign_site_to_scope(conn, _opts) do

with %{"subdomain" => subdomain} <- conn.params,

%Scope{} = current_scope <- conn.assigns.current_scope,

%Site{} = site <- Project.Hosting.get_site_by_subdomain(current_scope, subdomain),

%Membership{} = membership <- Project.Hosting.get_membership(current_scope, site) do

updated_scope =

current_scope

|> Scope.put_site(site)

|> Scope.put_membership(membership)

assign(conn, :current_scope, updated_scope)

else

_ -> conn

end

end

And now we explicitly check if there is a website a user has membership to, otherwise we redirect back to the site portal.

# lib/project_web/user_auth.ex

@doc """

Plug for routes that require the user to have access to the site.

"""

def require_site_access(conn, _opts) do

if get_in(conn.assigns.current_scope.membership) do

conn

else

flash =

if conn.params["subdomain"],

do: "You don't have access to that site.",

else: "Website not found!"

conn

|> put_flash(:error, flash)

|> redirect(to: ~p"/sites")

|> halt()

end

end

Anyway, let’s go hardcode index.html into our EditorController.

# lib/project_web/controllers/editor_controller.ex

defmodule ProjectWeb.EditorController do

alias Project.Storage

use ProjectWeb, :controller

def editor(conn, _params) do

contents =

case Storage.load("index.html") do

{:error, _} -> ""

{:ok, contents} -> contents

end

conn

|> render_inertia("Editor", %{buffer: contents})

end

def save(conn, %{"buffer" => buffer} = _params) do

scope = conn.assigns.current_scope

Storage.save("index.html", buffer)

conn

|> redirect(to: ~p"/sites/#{scope.site.subdomain}/editor")

end

end

So when we visit the editor, we’ll load the index.html buffer in by default. And when we save, well, there’s only one file to save, index.html, so save that.

A cool thing about InertiaJS is that redirecting to the same page will merge props but not necessarily unmount the components, just update the props we got. Pretty cool!

All we need to do is now consume this buffer.

type EditorProps = {

buffer: string

scope: Scope

}

function Editor({ buffer, scope }: EditorProps) {

const [value, setValue] = useState(buffer)

const onChange = useCallback((val: string, _viewUpdate: ViewUpdate) => {

// This is where we extract the buffer contents from Codemirror.

setValue(val)

}, [])

const saveBuffer = useForm()

saveBuffer.transform((_data) => ({ buffer: value }))

function save() {

saveBuffer.post(`/sites/${scope!.site!.subdomain}/editor`)

}

// ...

return (

<main>

<button className="px-2 py-1 bg-purple-700 text-white rounded-md" onClick={() => save()}>

Save

</button>

<CodeMirror

value={value}

// ...

onChange={onChange}

// ...

/>

</main>

)

}

Let’s see:

It works! Let’s revisit what was the V1 objective and what needs to be done.

Looking everything over, there is a lot more to be done.

I’m trying to think of the next interesting goal to go for though.

The obvious thing to do would be to expand the SiteFile stuff more, remove the hard coding, and make it all work with two user-owned sites.

I think the next big, “this is cool to aim for” would be also visiting the subdomain and seeing the site render as well. Basically cleaning up the UI and patching these bleedovers of scope sounds like a good idea.

Alright, let’s just get multiple files working and go from there.

First, we need to fix the Storage scoping to isolate files according to the website. Let’s add the scope in front of all the callbacks:

# lib/project/storage/adapter.ex

defmodule Project.Storage.Adapter do

alias Project.Accounts.Scope

@callback save(%Scope{}, String.t(), binary()) :: :ok | {:error, term()}

@callback load(%Scope{}, String.t()) :: {:ok, binary()} | {:error, term()}

@callback delete(%Scope{}, String.t()) :: :ok | {:error, term()}

end

And now we can update the local adapter.

defmodule Project.Storage.Local do

@behaviour Project.Storage.Adapter

defp base_path do

Application.fetch_env!(:project, :storage)[:base_path]

end

defp full_path(scope, path) do

base_path()

|> Path.join(scope.site.subdomain)

|> Path.join(path)

end

@impl true

def save(scope, path, content) do

full_path = full_path(scope, path)

File.mkdir_p!(Path.dirname(full_path))

File.write(full_path, content)

end

@impl true

def load(scope, path) do

full_path = full_path(scope, path)

case File.read(full_path) do

{:ok, content} -> {:ok, content}

error -> error

end

end

@impl true

def delete(scope, path) do

full_path = full_path(scope, path)

File.rm(full_path)

end

end

I updated the call sites within EditorController to also pass in the scope.

Let’s test that it saves it to the subdomain directory.

It works.
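One thing the path joining above doesn’t cover is a path like ../other-site/index.html, which would climb right out of the site’s directory. Here is a hedged TypeScript sketch of the same join with that guard added. resolveSitePath is a made-up name, and the rules (reject .., skip empty and . segments) are an assumption about what we’d want, not something the Local adapter currently does.

```typescript
// Hypothetical guard: build <base>/<subdomain>/<path> and refuse any path
// that tries to climb out of the site's directory.
function resolveSitePath(basePath: string, subdomain: string, relPath: string): string {
  const segments: string[] = []
  for (const segment of relPath.split("/")) {
    if (segment === "" || segment === ".") continue // skip empty and no-op segments
    if (segment === "..") throw new Error(`path escapes site directory: ${relPath}`)
    segments.push(segment)
  }
  if (segments.length === 0) throw new Error("path is empty")
  // Drop trailing slashes on the base so the join never doubles them.
  return [basePath.replace(/\/+$/, ""), subdomain, ...segments].join("/")
}
```

Rejecting .. outright is stricter than resolving it, but for a file host it seems like the safer default: there’s no good reason for a site file’s path to point upward.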

Now let’s make it so we can switch between files. Create files too.

Two hours later

I did a lot of research on how people implement these IDEs in the browser. Lotsa cool technology, but we’re just a simple static-file webhoster, I think.

There was a “social” website builder called glitch.com and they had to shut down. Because it’s more expensive, maybe. But it’s also like, not that fun to do ad-hoc nodejs server programming in the browser unless you’re trying to learn something. When in the world would you need these things to make a website that allows you to express yourself?

I mean, I can’t think of anything at all. All you need is HTML and CSS. Introducing a server and a runtime, it’s cool but it’s like what would you actually build with this? and when you think of all the people who have a website I mean seriously, there’s no need.

Think of it this way: the point of a server is to store data, create new data to sell, essentially, in my baseless reality. Store data from other people, and maybe your own data, possibly. But in the first case, what data do you need from other people? Just go to their website. And in the second case, making like a frictionless notetaking or whatever, I don’t know, you’d be better off using the dedicated consumer apps the corpos made.

Okay, anyway, cool thing about using React is you get all of these crazy UI things out of the box. Looking at these online IDEs — and not to imply I’ll ever match them — there’s a lot of things that’d probably take a lot of time, but not with React if you crash out on downloading all the libraries.

Well, for starters, let’s list the files a website has and load a tree view next to the editor. We’ll need to create a &list_site_files/1 function.

# lib/project/hosting.ex

def list_site_files(%Scope{} = scope) do

from(f in SiteFile)

|> where([f], f.site_id == ^scope.site.id)

|> Repo.all()

end

Let’s update the action within the editor controller.

# lib/project_web/controllers/editor_controller.ex

def editor(conn, _params) do

scope = conn.assigns.current_scope

files = Hosting.list_site_files(scope)

contents =

case Storage.load(scope, "index.html") do

{:error, _} -> ""

{:ok, contents} -> contents

end

conn

|> render_inertia("Editor", %{files: files, buffer: contents})

end

I suppose the initial render will always have index.html as the selected buffer, perhaps. Onward to the editor page.

type SiteFile = {

path: string

}

type EditorProps = {

buffer: string

scope: Scope

files: SiteFile[]

}

function Editor({ buffer, scope, files }: EditorProps) {

console.log("files", files)

// ...

}

Looking at the web console:

files Array [] Editor.tsx:51:10

It’s expected that the array of SiteFiles is empty.

We technically don’t have any files recorded in the database, since we were hardcoding index.html.

Anyway, let’s grab a tree file viewer from React land.

npm install @headless-tree/core @headless-tree/react classnames

Two hours later

Okay, I guess I’m not going to use this package because it’s causing issues with my bundling.

Which is fine, anyway, because I found another package.

npm install react-arborist

Look how much simpler the example looks!

// assets/js/pages/Editor.tsx

import { Tree, type NodeRendererProps } from "react-arborist"

type DataNode = {

id: string

name: string

children?: DataNode[]

}

const data: DataNode[] = [

{ id: "1", name: "Unread" },

{ id: "2", name: "Threads" },

{

id: "3",

name: "Chat Rooms",

children: [

{ id: "c1", name: "General" },

{ id: "c2", name: "Random" },

{ id: "c3", name: "Open Source Projects" },

],

},

{

id: "4",

name: "Direct Messages",

children: [

{ id: "d1", name: "Alice" },

{ id: "d2", name: "Bob" },

{ id: "d3", name: "Charlie" },

],

},

]

function SideBar() {

return (

<Tree initialData={data}>

{({ node, style, dragHandle }: NodeRendererProps<DataNode>) => (

<div

style={{ ...style, paddingLeft: node.level * 20, display: "flex", alignItems: "center" }}

onClick={() => node.toggle()}

ref={dragHandle}

>

{node.isLeaf ? "🌿" : node.isOpen ? "📂" : "📁"} {node.data.name}

</div>

)}

</Tree>

)

}

function Editor({ buffer, scope, files }: EditorProps) {

// ...

return (

<main>

<button className="px-2 py-1 bg-purple-700 text-white rounded-md" onClick={() => save()}>

Save

</button>

<SideBar /> {/* <- */}

<CodeMirror

value={value}

height="200px"

extensions={extensions}

onChange={onChange}

theme={"dark"}

indentWithTab={false}

/>

</main>

)

}

And now we get dragging out of the box.

Now we need an ability to add files and display all the files a site has.

Hour of contemplation

I don’t really want to use other people’s packages all that much. Why not just implement it ourselves? Grabbing a package to show a tree view seems like too much.

There’s always a cost when dealing with other people’s code, bloating one’s dependencies. Sometimes reinventing the wheel is the surest way you don’t have to repair it twenty times later down the line.

So I uninstalled that one too.

We will make our own tree view, but for today I will just list the paths linearly on the side.
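When the time does come for the tree, the core of it is turning flat path strings into nested nodes. A minimal sketch, assuming paths use / as the separator. PathNode and buildPathTree are hypothetical names, and sorting and rendering are left out.

```typescript
type PathNode = {
  id: string // full path up to and including this segment
  name: string // the segment itself, e.g. "site.css"
  children?: PathNode[] // present only for directories
}

function buildPathTree(paths: string[]): PathNode[] {
  const roots: PathNode[] = []
  for (const fullPath of paths) {
    let level = roots
    const segments = fullPath.split("/").filter((s) => s !== "")
    segments.forEach((segment, index) => {
      const id = segments.slice(0, index + 1).join("/")
      let node = level.find((n) => n.name === segment)
      if (!node) {
        node = { id, name: segment }
        level.push(node)
      }
      if (index < segments.length - 1) {
        // Not the last segment, so this node is a directory: descend into it.
        const children = node.children ?? []
        node.children = children
        level = children
      }
    })
  }
  return roots
}
```

The flat list on the side can keep working off the same array of paths, so switching to the tree later is only a change in how that array gets rendered.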

30 more minutes of contemplation

I think this is one of the main downsides of having to use JavaScript. There are so many options and ways to go about this. It sucks the joy out a little.

Alright, just need to get this done. The current thing I keep dodging around is creating files.

So let’s just list all files in a sidebar and then create.

I added some UI for listing the files since we already pass them down to the React component.

Let’s create a file.

# lib/project/hosting.ex

def create_site_file(%Scope{} = scope, path) do

with :ok <- Storage.save(scope, path, ""),

{:ok, file} <-

%SiteFile{path: path, mime_type: "text/html"}

|> SiteFile.changeset(%{}, scope)

|> Repo.insert_or_update() do

{:ok, file}

else

_ -> :error

end

end

This could look a lot better. I’m not sure I like the defaults around the changeset functions. We’ll see.

Anyway, we first save the file, and if it saves, we want to also record it in the database. We do insert_or_update because I’m not yet sure how to handle side effects.

Also, we could probably change this to just save_site_file and have it cover both the create & updates of a file. Let’s first make it so we can switch buffers and then do that.

Let’s add a create route in the router.ex.

# lib/project_web/router.ex

# ...

scope "/sites/:subdomain", ProjectWeb do

pipe_through [:browser, :require_authenticated_user, :require_site_access]

get "/editor", EditorController, :editor

post "/editor", EditorController, :save

post "/editor/files", EditorController, :create

end

# ...

Again, the route naming could be better here. It may even be a good idea to create a SiteFile controller, depending.

Hours later

I debated whether I even needed a SiteFile struct and table. Having to sync between B2 and this database seems like much, but let’s just go with it.

Another issue I ran into was that the SiteFile has no way to represent a directory if all :path attributes point to a file.

The cool thing though is that backblaze does the same thing by adding a .bzEmpty to “directories” they have. I can do the same thing, and then hide those on the frontend.
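A sketch of how that could look on the frontend: treat any path ending in /.bzEmpty as a directory marker, keep the directory, and hide the marker itself. The marker name comes from the Backblaze behavior described above; splitListing is a hypothetical helper.

```typescript
const DIR_MARKER = ".bzEmpty"

// Split stored paths into the files to show and the directories implied by markers.
function splitListing(paths: string[]): { files: string[]; directories: string[] } {
  const files: string[] = []
  const directories: string[] = []
  for (const p of paths) {
    if (p === DIR_MARKER) continue // a marker at the root carries no information
    if (p.endsWith("/" + DIR_MARKER)) {
      // Strip the trailing "/.bzEmpty" to recover the directory path.
      directories.push(p.slice(0, -(DIR_MARKER.length + 1)))
    } else {
      files.push(p)
    }
  }
  return { files, directories }
}
```

This only surfaces empty directories. Directories that already contain files are implied by those files’ paths, so they don’t need a marker to show up.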

Lastly I looked into MIME types a bit more to understand what to do about them. For now let’s just restrict all files to known extensions. I think, in general, we’ll rely on the extensions to determine MIME type. The MIME type lets the browser know how to render files.
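Restricting to known extensions and deriving the MIME type from them can be one lookup. A minimal sketch, where the list of allowed extensions is an assumed starting point rather than a decision, and mimeTypeForPath is a made-up name:

```typescript
// Allowed extensions and the MIME type each maps to (an assumed starter list).
const MIME_BY_EXTENSION = new Map<string, string>([
  ["html", "text/html"],
  ["css", "text/css"],
  ["js", "text/javascript"],
  ["json", "application/json"],
  ["txt", "text/plain"],
  ["png", "image/png"],
  ["jpg", "image/jpeg"],
  ["jpeg", "image/jpeg"],
  ["gif", "image/gif"],
  ["svg", "image/svg+xml"],
])

// Returns the MIME type for a path, or null when the extension is not allowed.
function mimeTypeForPath(filePath: string): string | null {
  const fileName = filePath.split("/").pop() ?? ""
  const dot = fileName.lastIndexOf(".")
  if (dot <= 0) return null // no extension, or a dotfile such as ".bzEmpty"
  const extension = fileName.slice(dot + 1).toLowerCase()
  return MIME_BY_EXTENSION.get(extension) ?? null
}
```

A null result doubles as the “this file type isn’t allowed” signal, so the same function can gate uploads and fill in the mime_type column.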

Okay, with that all out of the way, let’s get over this chapter 1 finish line and make it so we can create/delete files.

Four hour pause

I tried taking a break but it seems my brain doesn’t like to do anything else on the computer. It makes me paranoid more than anything, and then I wonder whether making this website will just further the paranoia.

Anyway, what needs to be done? I have the files listed in a flat list (needs to be a tree list later… but put it in the backlog). With that file list, well, I can’t actually click on the file to switch the buffer. Why don’t we do that next?

Hour of fiddling

So I built some of the functionality with Inertia, but I think the Editor page needs to just be a full-on SPA experience. I mean, this is the whole reason I chose Inertia in the first place: far more convenient of an escape hatch because we’re already in Javascript land.

Part of the reason why is because I want to mimic VSCode as much as possible, and having tabs and unsaved buffers and everything else, and keeping it client side, etc, it just makes more sense to keep it as “one experience”. The current implementation I have is replacing the URL by selected file type. I’m sure there’s a way to design this with Inertia completely, but it doesn’t seem that clear and I’m not sure what we get out of it. Basically I want to save and delete and create stuff without changing the URL or having to load in all the props again.

For example, I would load all the files on initial render, but now if someone visits editor/my_new_cool_file.html in another page, and I don’t load the props in that controller action too, then it fails. So I basically have to fetch all the files on every single action. Which, of course, can easily be done, since you can throw a function anywhere in the plug pipeline, but it’s indicative of a larger issue.

Besides, as a plus, by making this more “SPA” territory, when we start exploring collaborative coding and websockets, well, we definitely don’t want to be using Inertia there.

Anyway, let’s start consolidating state actions for our yet-to-be SPA.

npm i zustand

Let’s make a “store” or a place to hold and manipulate the state of buffers.

type FileBuffer = {

path: string

savedContent: string

unsavedContent: string

isDirty: boolean

}

type EditorState = {

openFiles: FileBuffer[]

activePath: string | null

subdomain: string | null

setSubdomain: (val: string) => void

openFile: (file: { path: string; content: string }) => void

closeFile: (path: string) => void

setActivePath: (path: string) => void

updateBuffer: (path: string, newContent: string) => void

saveFile: (path: string) => Promise<void>

}

Hour of fiddling

It’s been a while since I’ve used React, and honestly I don’t think I’ve used zustand before. Anyway, I ran into infinite render issues, and had to figure out how to actually structure the store.

Even now I think there is a more clear way to structure the store, but that shall wait for another day.
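For what it’s worth, the buffer bookkeeping itself doesn’t depend on zustand. Here is a sketch of it as plain functions over the state, assuming isDirty simply means the unsaved content differs from the saved content. The function names are made up and this is not the actual store from this project, just one shape it could take.

```typescript
type FileBuffer = {
  path: string
  savedContent: string
  unsavedContent: string
  isDirty: boolean
}

type BufferState = { openFiles: FileBuffer[]; activePath: string | null }

// Open a file, or just focus it if it is already open.
function withOpenedFile(state: BufferState, file: { path: string; content: string }): BufferState {
  const alreadyOpen = state.openFiles.some((f) => f.path === file.path)
  const fresh: FileBuffer = {
    path: file.path,
    savedContent: file.content,
    unsavedContent: file.content,
    isDirty: false,
  }
  return {
    openFiles: alreadyOpen ? state.openFiles : [...state.openFiles, fresh],
    activePath: file.path,
  }
}

// Record an edit; the buffer is dirty only while it differs from what was saved.
function withUpdatedBuffer(state: BufferState, path: string, newContent: string): BufferState {
  return {
    ...state,
    openFiles: state.openFiles.map((f) =>
      f.path === path
        ? { ...f, unsavedContent: newContent, isDirty: newContent !== f.savedContent }
        : f
    ),
  }
}

// After a successful save, the unsaved content becomes the saved content.
function withSavedFile(state: BufferState, path: string): BufferState {
  return {
    ...state,
    openFiles: state.openFiles.map((f) =>
      f.path === path ? { ...f, savedContent: f.unsavedContent, isDirty: false } : f
    ),
  }
}
```

Because each function returns a new state object instead of mutating the old one, they can be called from inside a store’s setter, and they are easy to test without rendering anything.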

Additionally, I think these controller actions can be more clear and have better error handling. I even think the Storage ought to be called from Hosting to make things easier. The structure will get clear with more time:

# lib/project_web/controllers/file_controller.ex

defmodule ProjectWeb.FileController do

alias Project.Storage

alias Project.Hosting

use ProjectWeb, :controller

def open(conn, %{"path" => path} = _params) do

scope = conn.assigns.current_scope

case Hosting.get_site_file_by_path(scope, Enum.join(path, "/")) do

nil ->

conn

|> put_status(404)

|> json(%{status: "error", error: "That file doesn't exist!"})

file ->

contents =

case Storage.load(scope, file.path) do

{:error, _} -> ""

{:ok, contents} -> contents

end

conn

|> json(%{status: "ok", content: contents})

end

end

def save(conn, %{"content" => content, "path" => path} = _params) do

scope = conn.assigns.current_scope

path = Enum.join(path, "/")

case Hosting.get_site_file_by_path(scope, path) do

nil -> Hosting.create_site_file(scope, path)

_ -> :ok

end

:ok = Storage.save(scope, path, content)

conn

|> json(%{status: "ok", content: content, message: "Successfully saved."})

end

end

And of course we need to update the router:

# lib/project_web/router.ex

scope "/api/sites/:subdomain", ProjectWeb do

pipe_through [:browser, :require_authenticated_user, :require_site_access]

post "/files/*path", FileController, :save

get "/files/*path", FileController, :open

end

I would show you the React code but it looks more putrid than the controller code so let’s just skip it.
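The client side of these two routes doesn’t need to be much, though. A minimal sketch of building the save request, assuming the :browser pipeline still has Phoenix’s default CSRF protection (so the token goes in an x-csrf-token header) and that the token is read from the page somehow. fileApiUrl and buildSaveRequest are hypothetical names, not the code this project uses.

```typescript
// Build /api/sites/:subdomain/files/*path, encoding each segment separately
// so that slashes inside the path still separate directories.
function fileApiUrl(subdomain: string, filePath: string): string {
  const encodedPath = filePath
    .split("/")
    .map((segment) => encodeURIComponent(segment))
    .join("/")
  return `/api/sites/${encodeURIComponent(subdomain)}/files/${encodedPath}`
}

type SaveRequest = {
  url: string
  init: { method: string; headers: Record<string, string>; body: string }
}

// Assemble the pieces of the POST without sending it, which keeps this testable.
function buildSaveRequest(
  subdomain: string,
  filePath: string,
  content: string,
  csrfToken: string
): SaveRequest {
  return {
    url: fileApiUrl(subdomain, filePath),
    init: {
      method: "POST",
      headers: { "content-type": "application/json", "x-csrf-token": csrfToken },
      body: JSON.stringify({ content }),
    },
  }
}

// Usage in the browser (not executed here):
//   const { url, init } = buildSaveRequest("test", "index.html", "<h1>hi</h1>", token)
//   const response = await fetch(url, init)
```

The JSON body only carries content because the path already travels in the URL, which matches how the save action pattern matches on "content" and "path".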

In any case! Check it out:

Cool. Now we need a way to create and delete files. We can also add active tabs, too.

But I think this is a good checkpoint. Though we intended to get the rest of the file stuff done this chapter, that’s fine. We will complete the above in the next chapter. We’re almost at 10k words, even if half is composed of code blocks. It doesn’t seem like much, but it’s a start.

If you actually read this entire thing, thanks for your time, and let me know if you have any questions or requests, whether about what’s written or about building this website. Cheers!